I had an issue with the comment block not showing up on a post, and it turned out the cause was that WordPress had let me set both a Page and a Post to the same URL. Note that having the page set to “Draft” didn’t matter.
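If you want to check a site for this kind of collision, here is a minimal Python sketch. It makes two assumptions. First, your permalink structure puts posts and pages in the same URL space (for example /%postname%/). Second, you have already pulled (post_type, slug, status) rows out of WordPress, e.g. from the post_type, post_name and post_status columns of wp_posts. The function and its input shape are my own, not a WordPress API. Status is ignored on purpose, since the draft page still collided.

```python
from collections import defaultdict


def find_slug_collisions(entries):
    """Return slugs used by at least one page AND at least one post.

    `entries` is an iterable of (post_type, slug, status) tuples.
    Status is ignored on purpose: a draft page still claims the URL.
    """
    types_by_slug = defaultdict(set)
    for post_type, slug, _status in entries:
        types_by_slug[slug].add(post_type)
    # A slug is a collision when both "page" and "post" appear for it.
    return sorted(slug for slug, types in types_by_slug.items()
                  if {"page", "post"} <= types)


rows = [
    ("post", "my-post", "publish"),
    ("page", "my-post", "draft"),
    ("page", "about", "publish"),
]
print(find_slug_collisions(rows))  # ['my-post']
```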

Bluescreen 0xc00002e2

Just a smidge of background first. I came in this morning and the Hyper-V guest running our Windows Server domain controller was getting paused while booting, with a disk I/O error of some sort inside the VM (there were no errors within the cluster itself). It turned out that, due to an issue I’m having with the DPM backups, I had half a dozen snapshots stacked up for the server. After I merged them all, the server stopped getting paused, but then it bluescreened with error 0xc00002e2, and the Internet was less than helpful.

The error is caused by a problem with the Active Directory database, and running a repair on it fixed it up. I first booted to the repair mode command prompt and tried to run esentutl /p against ntds.dit. It failed with the error “Unable to find the callback library ntdsai.dll”. The fix for that was to run the utility from the Windows instance being repaired. In this case the repair tool dropped me off on an X:\ drive, but the Windows instance I wanted to repair was actually on the E: drive. So I changed the directory to e:\windows\system32, and the commands were something like:

cd e:\windows\system32

esentutl /p e:\windows\ntds\ntds.dit
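Python isn’t available in the recovery environment, so treat this as an illustration of the logic rather than something to run there. The idea is to find the drive letter that holds both ntds.dit and the ntdsai.dll callback library, then run esentutl from that instance’s System32. The function names and the exists hook are hypothetical; the hook lets the check be tried against a fake file list. The sketch uses cd /d, which makes cmd switch the current drive as well as the directory.

```python
import os
from pathlib import PureWindowsPath


def find_offline_ntds(drive_letters, exists=os.path.isfile):
    """Return (system32_dir, ntds_path) for the first drive holding an AD database.

    A drive only qualifies if it has both ntds.dit and ntdsai.dll, the callback
    library esentutl could not find when run from the X: recovery drive.
    """
    for letter in drive_letters:
        windows = PureWindowsPath(f"{letter}:\\") / "Windows"
        ntds = windows / "NTDS" / "ntds.dit"
        dll = windows / "System32" / "ntdsai.dll"
        if exists(str(ntds)) and exists(str(dll)):
            return windows / "System32", ntds
    return None


def repair_commands(drive_letters, exists=os.path.isfile):
    """Build the cmd lines that run the offline instance's own esentutl."""
    found = find_offline_ntds(drive_letters, exists)
    if found is None:
        raise FileNotFoundError("no drive with both ntds.dit and ntdsai.dll")
    system32, ntds = found
    # cd /d also changes the current drive; cmd searches the current
    # directory first, so the offline instance's esentutl.exe is the one run.
    return [f"cd /d {system32}", f"esentutl /p {ntds}"]
```

With E: as the offline instance, repair_commands("CDEX") would return the two lines cd /d E:\Windows\System32 and esentutl /p E:\Windows\NTDS\ntds.dit.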

I honestly figured it would fail, but it ran through successfully and the server booted up fine afterwards. Note that I have other DCs for this domain, so I didn’t have to worry too-too much about the actual data and state of the DS database, since it was just going to be plowed over with the objects from one of the other DCs.

Data Loads in CSI/Syteline

Nothing too extraordinary here, lots of “paste rows append” in the grid view, but I’ll touch on a few notes from our experience.

Our first sets of data loaded were the customer and vendor tables. These were sometimes loaded months before go-live to get the different groups used to going into the system to keep the records up to date. That might not be workable if these records change frequently, but even a week ahead of time should be reasonable.

The issue with Customers in particular is that there is no easy, automatic way to load the ship-tos, since the ship-to ranking can only be set after the record is loaded. Our workaround was to set up those records manually, and that’s something most places will only want to do once. Another issue for both customers and vendors is that the records get a lot of tuning during testing, and it would be difficult to capture that in a coded export.

The setup for Customers has a generous number of places where addresses and contacts can be added, but for vendors there is just the one record, plus a second record, of sorts, for the remit-to (only one remit-to can be set). This is an intractable issue with the package, since making Vendors work like Customers would require quite a set of database changes. The only thing I will note here is that for both Customers and Vendors we used a truncated version of the customer’s/vendor’s name instead of auto-numbering (CSI doesn’t make it easy for users to find names). We also prefixed the vendor’s remit-to with ‘ZZ’ to make it easier to tell apart from the main address.
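Here is a minimal Python sketch of that naming scheme, under a few assumptions. The 7-character width is a placeholder, so check the length of the customer and vendor number fields in your version. Collisions get a numeric suffix, and the ‘ZZ’ prefix is applied to the remit-to’s key. The function name and the collision handling are my own, not something CSI provides.

```python
import re


def make_code(name, taken, width=7, prefix=""):
    """Turn a name into a short, readable key such as 'ACMETOO'.

    width=7 is a placeholder; use your CSI field length. `taken` is a set of
    codes already assigned and is updated in place.
    """
    base = (prefix + re.sub(r"[^A-Z0-9]", "", name.upper()))[:width]
    code, n = base, 1
    while code in taken:
        # Collision: overwrite the tail with a counter, keeping the width.
        suffix = str(n)
        code = base[: width - len(suffix)] + suffix
        n += 1
    taken.add(code)
    return code


taken = set()
print(make_code("Acme Tool & Die", taken))               # ACMETOO
print(make_code("Acme Tooling", taken))                  # ACMETO1
print(make_code("Acme Tool & Die", taken, prefix="ZZ"))  # ZZACMET (remit-to)
```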

Items was the table we usually loaded a couple of days ahead of time. Note that this burned me every time, since production did a poor job of keeping the records up to date, even over the course of 72 hours. I tried to control for this by having my router export program tell me which parts had no match in CSI (that is, parts on a router exported from the old system that had no corresponding item in CSI). Even at our smaller operations it took several hours to paste the Current Operations into the system, so here’s a big tip: refresh after each segment of data is pasted in. For instance, I would generally paste 1,000 rows at a time. After the paste completed and I saved the records (hopefully without an error!), I would refresh the form so that only 200 records were on it. If the form is not refreshed, CSI gets slower and slower as it has to keep track of more and more data.
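The router cross-check and the 1,000-row batching could look something like this in Python. It’s a sketch with some assumptions. The router export and the CSI item list are available as plain rows, and the item key is hypothetical. Part numbers are compared trimmed and upper-cased, which may be looser or stricter than your data needs.

```python
from itertools import islice


def unmatched_parts(router_rows, csi_items):
    """Part numbers on the legacy router export with no matching CSI item."""
    known = {item.strip().upper() for item in csi_items}
    on_router = {row["item"].strip().upper() for row in router_rows}
    return sorted(on_router - known)


def batches(rows, size=1000):
    """Yield lists of at most `size` rows: one paste, save, refresh cycle each."""
    it = iter(rows)
    while chunk := list(islice(it, size)):
        yield chunk


router = [{"item": "BRKT-100"}, {"item": "brkt-200 "}, {"item": "BRKT-100"}]
print(unmatched_parts(router, ["BRKT-100"]))   # ['BRKT-200']
print([len(b) for b in batches(range(2500))])  # [1000, 1000, 500]
```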

The last thing I want to touch on is importing WIP value and shop floor transactions: both of these had to be done by hand. Obviously the goal is to “run out” as many jobs as possible to minimize this work, or at the very least complete/close operations in the legacy system. For the WIP value we issued a fake part to each job, carrying the job’s current WIP dollar total (when asked to create the part, just click Cancel). The shop floor transactions were as much fun as they sound. As many people as could be convinced to come in over the weekend were trained to key data into Unposted Job Transactions and to close out operations on Job Operations. If possible, have someone from production help with this so they can catch last-minute operator keying errors.
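For the WIP step, here is a small Python sketch that builds a paste-ready issue list. Several details are assumptions for illustration: the fake item code WIP-CONV, the column names, and issuing a quantity of 1 at a unit cost equal to the job’s WIP total. The point is one issue per job that carries the job’s WIP dollars. Decimal is used so the dollar amounts don’t pick up floating-point noise.

```python
from decimal import Decimal


def wip_issue_rows(wip_by_job, item="WIP-CONV"):
    """One paste-ready issue row per job carrying that job's WIP dollars.

    `item` and the column names are hypothetical; qty 1 at a unit cost equal
    to the WIP total is an assumption about how the value is carried.
    """
    rows = []
    for job, total in sorted(wip_by_job.items()):
        if total == 0:
            continue  # nothing to carry over for this job
        rows.append({"job": job, "item": item, "qty": 1,
                     "unit_cost": total.quantize(Decimal("0.01"))})
    return rows


wip = {"J1002": Decimal("150.5"), "J1001": Decimal("1200"), "J1003": Decimal("0")}
for row in wip_issue_rows(wip):
    print(row)
```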

Redundant Fortigate VPN with Cradlepoint

This post is working from the following assumptions:

- The Cradlepoint devices are using Cradlepoint’s cloud management service. Otherwise, the routing between the Cradlepoint and the protected network needs to be set up. I was unable to get that to work, though I didn’t put any time into it since I didn’t need it.

- A pseudo “Spoke-Hub” setup, with the redundancy of the hub to the spoke not being of great concern. In this case our hub site is a cloud provider that uses Fortinet’s hosted version of a Fortigate.

- I am not an expert in this field; some of these steps may not be needed, or the configuration may be suboptimal in some ways. I am just relating what worked for me.

The initial setup of the spoke site is a simple site-to-site VPN utilizing static IP addresses at each site.

That link will need to be redone since the new connection at the spoke site will need to be an aggregate VPN and the existing IPsec tunnel cannot be set as an aggregate member. I recommend using the USB configuration load so that if the process goes south, the Fortigate can be rebooted and the old, working configuration reloaded.

First, the Cradlepoint needs to be connected to a dedicated interface on the Fortigate. For most of the sites I used Wan2, though at one I had to take a port out of the LAN configuration. Ideally this port would be part of a separate network, so that a system can be hooked up to the other ports in that set to diagnose subnet-specific issues, but I forget to do that every time. With that done, I set up two equally weighted static routes to the static IP address of the Hub: one through the existing gateway, and one through the internal address of the Cradlepoint. (I did this because the only thing I wanted routed over the Cradlepoint was work traffic.)
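To make “equally weighted” concrete, here is a Python sketch that renders the two routes as FortiOS CLI. Giving both routes the same distance and priority is what lets the Fortigate treat them as equal-cost routes. The addresses and interface names in the usage line are placeholders. Double-check the output against your firmware version’s CLI reference before pasting it.

```python
def static_routes_cli(hub_ip, paths, distance=10, priority=0):
    """Render FortiOS `config router static` with one /32 route to the hub per path.

    `paths` is a list of (gateway, interface) pairs. Identical distance and
    priority on every entry is what makes the routes equally weighted.
    """
    lines = ["config router static"]
    for gateway, device in paths:
        lines += [
            "    edit 0",  # 0 = let FortiOS pick the next free sequence number
            f"        set dst {hub_ip} 255.255.255.255",
            f"        set gateway {gateway}",
            f'        set device "{device}"',
            f"        set distance {distance}",
            f"        set priority {priority}",
            "    next",
        ]
    lines.append("end")
    return "\n".join(lines)


# Hypothetical: hub at 203.0.113.10, existing gateway on wan1,
# Cradlepoint's internal address on wan2.
print(static_routes_cli("203.0.113.10",
                        [("198.51.100.1", "wan1"), ("192.168.0.1", "wan2")]))
```

Routing only the hub’s /32 through the Cradlepoint keeps everything except work traffic off the cellular link.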

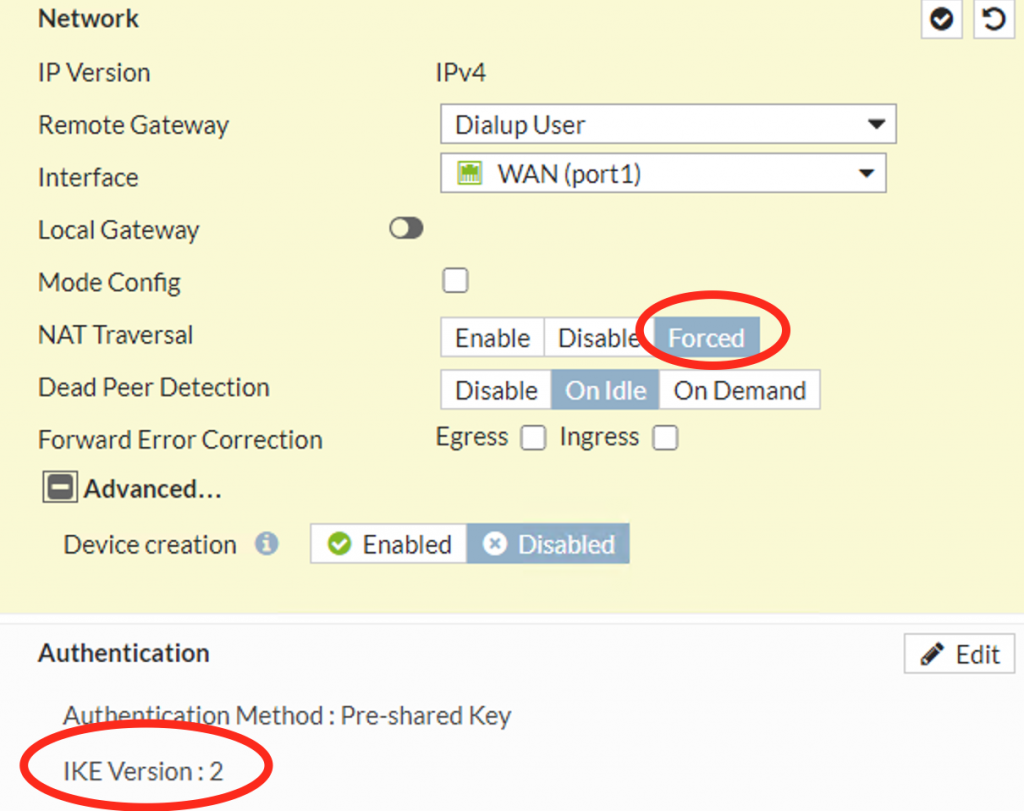

Next, make a new VPN connection at the Hub that will listen for the Spoke connection. This will be a “dial up” VPN connection; I used IKEv2 and forced NAT traversal, based on recommendations from Fortinet’s support forum:
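For reference, the hub-side settings described above map roughly onto this FortiOS phase 1 configuration. A Python helper renders it so the values are easy to swap. The tunnel name, interface, proposal and PSK are placeholders, and phase 2 isn’t shown. A hosted Fortigate may also expose these settings differently, so treat this as a sketch.

```python
def hub_dialup_phase1(name, wan_if, psk, proposal="aes256-sha256"):
    """FortiOS phase 1 for a dial-up (dynamic) IKEv2 tunnel with forced NAT-T."""
    return "\n".join([
        "config vpn ipsec phase1-interface",
        f'    edit "{name}"',
        "        set type dynamic",  # dial-up: the hub waits for the spoke
        f'        set interface "{wan_if}"',
        "        set ike-version 2",
        "        set peertype any",
        "        set nattraversal forced",
        f"        set proposal {proposal}",
        f"        set psksecret {psk}",
        "    next",
        "end",
    ])


print(hub_dialup_phase1("spoke1-cp", "port1", "CHANGE-ME"))
```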

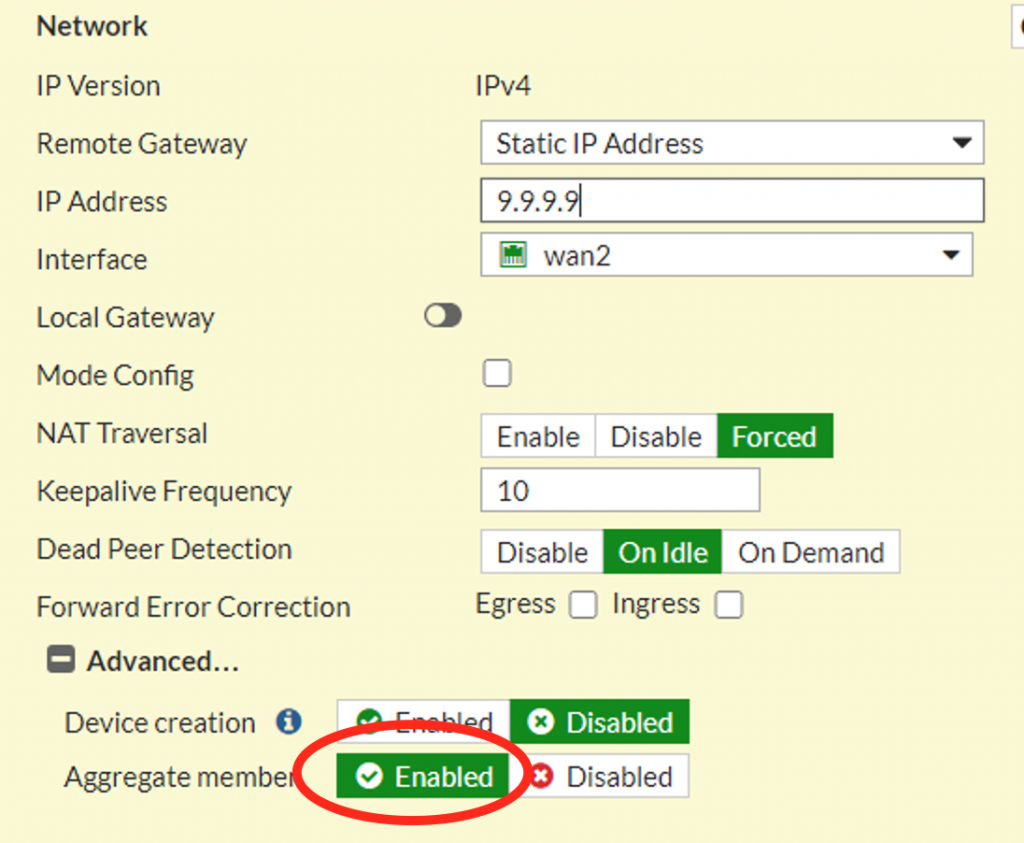

Then, at the spoke site, I set up a matching VPN connection, being careful to mark it as an aggregate member.
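The matching spoke-side phase 1 could be sketched the same way. The key line is set aggregate-member enable, which lets the tunnel join an aggregate. The names, addresses and PSK are placeholders, and the proposal has to match whatever the hub uses.

```python
def spoke_member_phase1(name, wan_if, hub_ip, psk, proposal="aes256-sha256"):
    """FortiOS phase 1 for the spoke side, flagged so it can join an aggregate."""
    return "\n".join([
        "config vpn ipsec phase1-interface",
        f'    edit "{name}"',
        f'        set interface "{wan_if}"',
        "        set ike-version 2",
        "        set peertype any",
        "        set nattraversal forced",
        f"        set proposal {proposal}",
        f"        set remote-gw {hub_ip}",
        f"        set psksecret {psk}",
        "        set aggregate-member enable",  # needed before adding it to an aggregate
        "    next",
        "end",
    ])


# Hypothetical: Cradlepoint on wan2, hub's static IP 203.0.113.10.
print(spoke_member_phase1("hub-cp", "wan2", "203.0.113.10", "CHANGE-ME"))
```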

Next, set up a new Redundant IPsec aggregate on the Spoke and add the new VPN connection to it. On the Hub, add a new, equally weighted static route to the Spoke’s network using the new VPN connection made at the hub, and add policy rules allowing traffic over it.
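Here is the redundant aggregate itself as a FortiOS CLI sketch rendered from Python. The aggregate and member names are hypothetical. The members must already exist as tunnels with aggregate-member enabled.

```python
def redundant_aggregate(agg_name, members):
    """FortiOS `config system ipsec-aggregate` entry using the redundant algorithm."""
    if not members:
        raise ValueError("an aggregate needs at least one member tunnel")
    quoted = " ".join(f'"{m}"' for m in members)  # members in the order given
    return "\n".join([
        "config system ipsec-aggregate",
        f'    edit "{agg_name}"',
        f"        set member {quoted}",
        "        set algorithm redundant",
        "    next",
        "end",
    ])


# At this stage only the Cradlepoint tunnel is a member.
print(redundant_aggregate("to-hub", ["hub-cp"]))
```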

So far this has been non-destructive, but the next step will interrupt the connection for a bit, depending on how fast you are. On the spoke, change the route to the hub network so that it uses the IPsec aggregate instead of the existing VPN connection. Then change the policy rules that allow traffic to (and from) the hub to use the IPsec aggregate as well. At this point the VPN connection over the Cradlepoint network should come up; if not, diagnose and fix the issue (every time I had an issue here, it was because the settings in my VPN connections did not match up).

Next, delete the old VPN connection on the Spoke that went to the Hub network and recreate it, this time as an aggregate member.

In this next step I usually add the new, re-done VPN connection to the aggregate and then remove the Cradlepoint connection from the aggregate to test the new connection more thoroughly. Once I confirm that it works, I add the Cradlepoint back into the aggregate. I then test the redundancy by “breaking” the regular VPN connection at the hub (by changing the passcode, etc.), and the Fortigate should fail over to the Cradlepoint VPN. When I “un-break” it, it should fail back (confirm by resetting the statistics on the VPN monitor).

CSI/Syteline Stored Procedure Report

I generally like having custom reports within the system, but it can be spendy to do a bunch of customized reports for trivial tasks. One issue I faced was with reports (in version ~9) where the data was good but the layout wasn’t in a dataview (Vouchers Payable, Aging reports, etc.). I could get around this myself by running a query inside SQL Server Management Studio, but that got me thinking: why not let users execute the stored procedures that generate the data themselves, from within Excel? This gets around two issues. First, it is not a customized report, since the user is running the same routine that CSI does. Second, they needed to get the data into Excel anyway, so in a way I’m skipping the “middle man”.

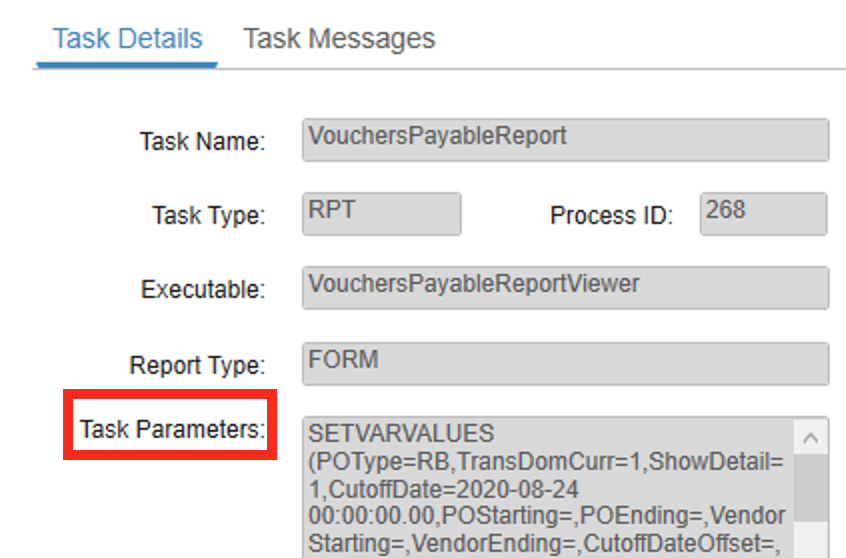

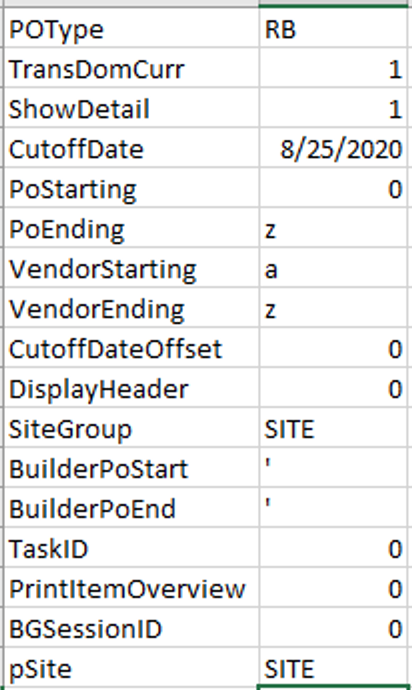

The first thing to do is to collect the list of parameters needed to run the report. This can be gotten rather easily by “printing” the report and then going to Background Task History and pulling the task parameters:

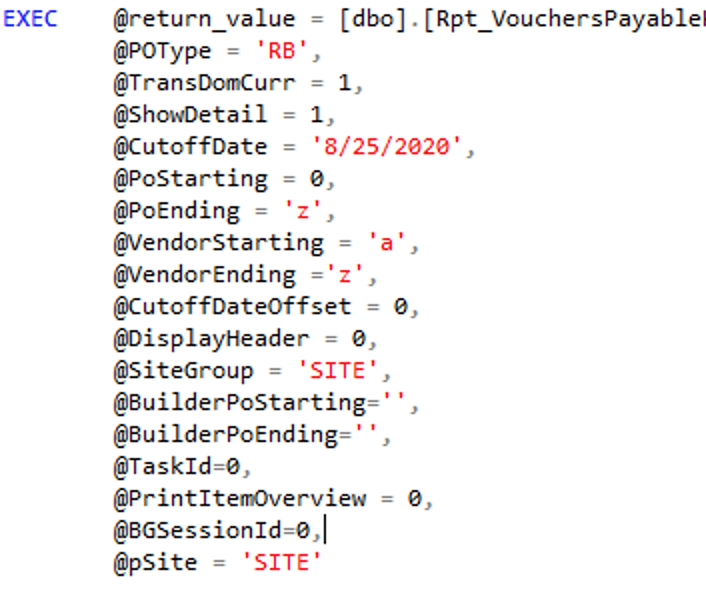

I then make a note of those and execute the stored procedure inside SSMS using those parameters. An important note here: do not leave any of the values as “null”, because Excel cannot pass null values to the stored procedure (at least not the way I am doing it, without any code). After some cleanup, I have an EXEC call with every parameter filled in.

This is also good because the parameters are in the sequence Excel needs, as we’ll see in the next step, where we put the parameters on a separate tab. Although Excel requires ranges, and it “kinda sorta” doesn’t do nulls, I found that putting a double single quote ('') in the cell accomplishes what I need (note: it shows up as a single ‘ when viewing the spreadsheet):

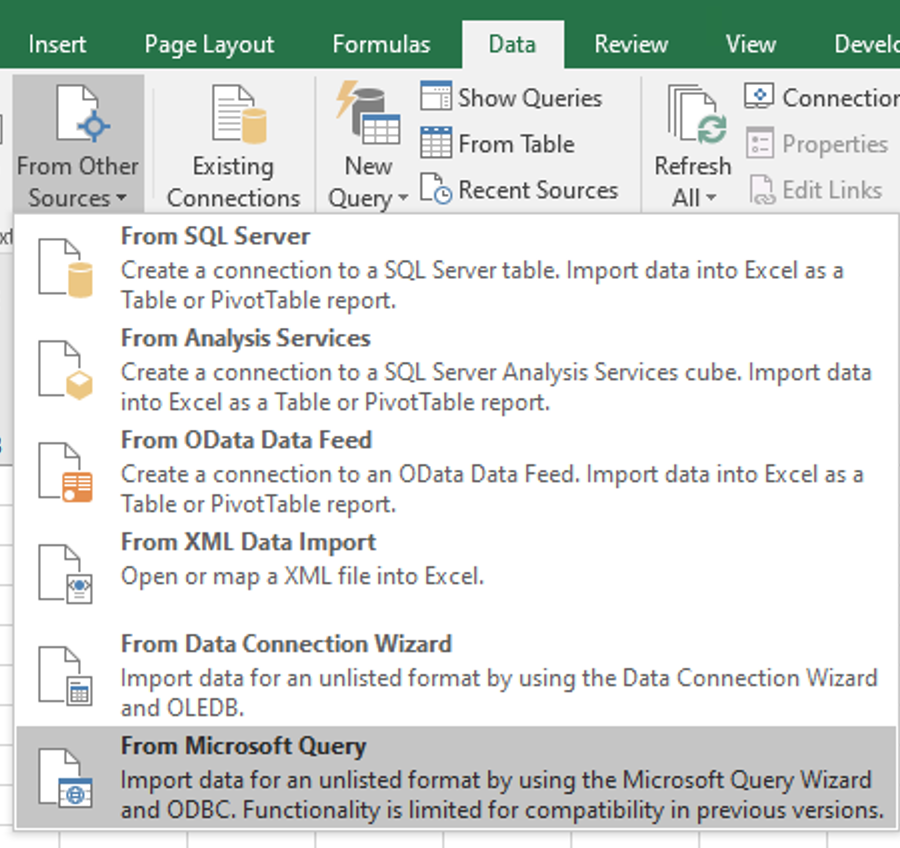

Next we can put the query together in Excel. Be sure to use “From Microsoft Query”, because otherwise we will not be able to pass parameters (dynamically) to the stored procedure:

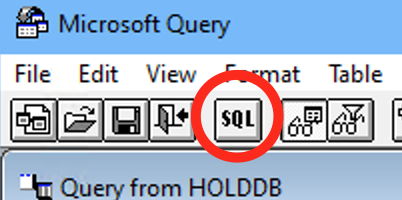

On the next step, add a connector to the database server if needed (“New Data Source”), then select the connector and click OK. On the step after that, click Cancel and then Yes to edit the query in Microsoft Query. Click Close, and then click the SQL button.

Then click the Definition tab and then Edit Query. The formatting on this can be a smidge tricky since there are multiple parameters, but you’ll want a question mark for each parameter going to the stored procedure, so in this case I’ll enter a query like this:

{call Rpt_VouchersPayableSp (?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?)}

After you click OK, you will get a warning that the query can’t be represented graphically. Click OK again and you will be prompted to fill out the parameters; just click OK on all of them, leaving them blank. Even if it comes back with no data, that’s okay; just go up to File->Return Data to Excel.
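Counting question marks by hand gets error-prone with this many parameters. Here is a small Python sketch that builds the ODBC call escape from a parameter count. It also includes a helper that refuses to pass None, since this route can’t send nulls. What to substitute depends on the procedure; the empty-string default here is an assumption. Both function names are my own.

```python
import re


def odbc_call(proc_name, n_params):
    """ODBC call escape for a stored procedure: one ? placeholder per parameter."""
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_.]*", proc_name):
        raise ValueError(f"unexpected procedure name: {proc_name!r}")
    placeholders = ",".join("?" * n_params)
    return f"{{call {proc_name} ({placeholders})}}"


def no_nulls(params, blank=""):
    """Swap None for a placeholder value, since this route can't pass NULLs."""
    return [blank if p is None else p for p in params]


print(odbc_call("Rpt_VouchersPayableSp", 3))  # {call Rpt_VouchersPayableSp (?,?,?)}
print(no_nulls(["ALL", None, 0]))             # ['ALL', '', 0]
```

The same string could also be passed through pyodbc as cursor.execute(odbc_call(name, len(params)), no_nulls(params)), though that route is untested here.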

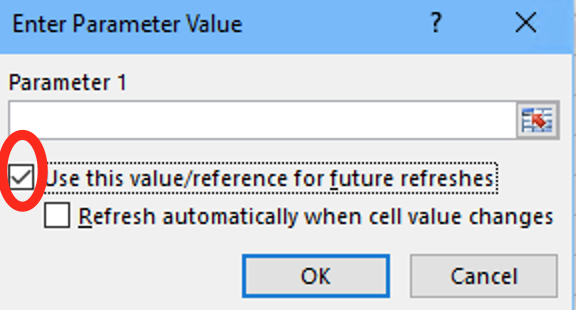

When it goes to Excel, it will prompt for the parameters again. This time it will more than likely want real data. Otherwise it will error out and dump the query, and you’ll have to restart from the Data Connector step. I will usually key the values in and fix them afterward. You can also set it up properly at this step by selecting the cells on the Parameters sheet; just be sure to click ‘Use this value’.

Hopefully that ran through okay, but this then gets into a permission issue that I ran into. For users running these stored procedures the only database access I wanted them to have was to the stored procedures themselves. However, when I tried to run it as the user I would get ODBC errors in Excel and in SQL I would get errors like:

Get DataType failed for StoredProcedureParameter

Property DataType is not available for StoredProcedureParameter

This post put me on the right path. The issue is that many CSI stored procedures use custom data types, and the user needs View Definition permission on the schema those data types are scoped to, in this case ‘dbo’. So I give the user Execute permission on the stored procedure and View Definition on the dbo schema, and then the user can run the query inside Excel with minimal permissions.
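The permission setup boils down to two kinds of GRANT. Here is a minimal Python sketch that generates them with bracket-quoted identifiers. The user name in the usage example is a placeholder and must already exist as a user in the CSI database.

```python
def quote_ident(name):
    """Bracket-quote a SQL Server identifier, doubling any ']' inside it."""
    return "[" + name.replace("]", "]]") + "]"


def minimal_grants(user, procedures, schema="dbo"):
    """EXECUTE on each report procedure plus VIEW DEFINITION on the schema
    that owns CSI's user-defined data types."""
    u, s = quote_ident(user), quote_ident(schema)
    stmts = [f"GRANT EXECUTE ON OBJECT::{s}.{quote_ident(p)} TO {u};"
             for p in procedures]
    stmts.append(f"GRANT VIEW DEFINITION ON SCHEMA::{s} TO {u};")
    return stmts


for stmt in minimal_grants("report_user", ["Rpt_VouchersPayableSp"]):
    print(stmt)
```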

[Note that some of the data returned by these stored procedures can be ungainly. For the Vouchers Payable report I ended up making a new copy of the stored procedure and cleaning up the outputs. This is rather trivial and the advantage here is that as the stored procedure changes, the Excel output will change accordingly without having to change anything on the spreadsheet].