With our implementation of CSI/Syteline it was important to bring over our work instructions, which meant extracting the notes from the old system and loading them into the new one. The catch is that CSI has no real built-in method for this, so direct SQL access is required to pull it off.

Please keep in mind that being a SQL DBA is one of my many part-time jobs and not a specialty, so some of these steps may not be as efficient as they could be.

Our two production systems stored their notes in two different ways, though the process for getting them into CSI was basically the same:

- For JDE, our notes were integrated into the routing line, and because of that structure they required some re-processing. Fortunately these routers were very stable, so I didn’t have to worry about a “live migration” of the notes. Using a brief piece of code I extracted the lines and formatted them for Excel (although in theory the same steps could be done in Excel without any code).

- For our JobBOSS notes I needed to be able to pull these over at or near go-live. This meant that I had to do some very light processing of the note text within Visual Studio.

In CSI there are three tables of importance:

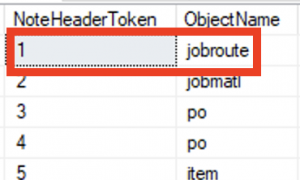

- NoteHeaders – This table lists which “token” goes with which table. Since these are work instructions on the router, the notes will be associated with the jobroute table. The important point is that at least one note has to be created through the interface to generate this entry. There are other possible ways, but this is the simplest: make a note on an operation line, then check the NoteHeaders table to see which number goes with jobroute. The note can then be deleted.

- SpecificNotes – This table holds the note text (and note title) that will contain the work instructions.

- ObjectNotes – This table ties everything together. Each row holds the key of the note text in the SpecificNotes table, the table the note belongs to (using the number from the NoteHeaders table), and the row pointer of the record in that table that the note is attached to.
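To make the relationship between the three tables concrete, here is a minimal in-memory sketch of how a note is resolved for one router row. The record types are hypothetical stand-ins that carry only the columns discussed in this post, and `TableName` is an assumed name for whatever column in NoteHeaders identifies the target table; check the real column name in your own database.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical mirrors of the three CSI note tables (illustration only).
// "TableName" is an assumed column name, not confirmed against the CSI schema.
public record NoteHeader(int NoteHeaderToken, string TableName);
public record SpecificNote(int SpecificNoteToken, string NoteDesc, string NoteContent);
public record ObjectNote(int ObjectNoteToken, int NoteHeaderToken, string RefRowPointer, int SpecificNoteToken);

public static class NoteModel
{
    // Follows NoteHeaders -> ObjectNotes -> SpecificNotes and returns the
    // note text attached to one row (rowPointer) of one table (tableName).
    public static IEnumerable<string> NotesForRow(
        string tableName,
        string rowPointer,
        IEnumerable<NoteHeader> headers,
        IEnumerable<ObjectNote> links,
        IEnumerable<SpecificNote> notes)
    {
        return from h in headers
               where h.TableName == tableName
               join l in links on h.NoteHeaderToken equals l.NoteHeaderToken
               where l.RefRowPointer == rowPointer
               join n in notes on l.SpecificNoteToken equals n.SpecificNoteToken
               select n.NoteContent;
    }
}
```

The point of the sketch is only that a work instruction is reachable from a router row through two hops: the row pointer and header number select an ObjectNotes row, and that row's SpecificNoteToken selects the text.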

A complicating factor for our JobBOSS implementation was that we had to rework and/or resequence the router lines (for instance, something that was “Op100-CNC” in JobBOSS might be “Op80-Mill” in CSI). If nothing had changed on the routers, it would have been possible to “brute force” the note entry: add all the notes, pull the row pointers in sequence, and build a query from that to add the associations in the ObjectNotes table. With 100 router lines and 100 notes, I’d know that the 50th note in the SpecificNotes table goes to the 50th record in the jobroute table. Because the routers were changing, however, I had to handle the notes in the same C# code in Visual Studio that I was using to put the routers together, since only that code knew what the final sequence would be.
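For the simpler case where the routers are not resequenced, the “brute force” pairing could look roughly like the sketch below. This is not the code I ran; `PositionalPairing` and its parameter names are made up for illustration, and it assumes both lists were read in the same order the notes were inserted.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class PositionalPairing
{
    // Pairs the i-th note token with the i-th router row pointer.
    // Only safe when both lists are the same length and in the same order.
    public static List<(int NoteToken, string RowPointer)> Pair(
        IReadOnlyList<int> noteTokensInInsertOrder,
        IReadOnlyList<string> rowPointersInRouterOrder)
    {
        if (noteTokensInInsertOrder.Count != rowPointersInRouterOrder.Count)
            throw new ArgumentException("Counts differ; positional pairing is not safe.");

        return noteTokensInInsertOrder
            .Zip(rowPointersInRouterOrder, (token, row) => (NoteToken: token, RowPointer: row))
            .ToList();
    }
}
```

The count check matters: a single router line without a note shifts every pairing after it, which is exactly why this approach breaks down once lines are added, removed, or reordered.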

The first step then was to extract the router lines from JobBOSS. I used a LINQ query like this:

```csharp
var operations = from o in jo.Job_Operations
                 join o2 in jo.Jobs on o.Job equals o2.Job1
                 join o3 in jo.Materials on o2.Part_Number equals o3.Material1
                 where o.Status.Trim().Equals("T") && o2.Priority < 9
                       && o3.Status.Trim().Equals("Active") && o2.Part_Number != null
                 orderby o3.Material1, o.Sequence
                 select new
                 {
                     o.Job, o3.Material1, o.Sequence, o.Description, o.Est_Setup_Hrs, o.Inside_Oper,
                     o.Operation_Service, o.Run, o.Run_Method, o.Vendor, o.WC_Vendor, o.Work_Center,
                     o.Last_Updated, o.Last_Updated_By, o.Est_Unit_Cost, o.Note_Text, o.Cost_Unit
                 };
```

The “where” clause limits the JobBOSS jobs being pulled over to templates (“T”) that belong to Active part numbers.

Many, many lines of code later I get to the note section. Due to the nature of the notes tables I was unable to get the code to insert the notes directly into the database, so instead I wrote the queries out to a text file that I then ran in SSMS. I cycled through each line in the router and wrote out an INSERT statement for each note:

```csharp
// The note text goes straight into a SQL string literal, so any single
// quotes in n.NoteText must already be doubled ('') at this point.
string sqlCmd = "INSERT INTO [SpecificNotes] (SpecificNoteToken, NoteContent, NoteDesc, NoteExistsFlag, " +
    "CreatedBy, UpdatedBy, CreateDate, RecordDate, RowPointer, InWorkflow) " +
    "VALUES ((SELECT ISNULL(MAX(SpecificNoteToken) + 1, 0) FROM dbo.[SpecificNotes]), '" +
    n.NoteText + "', 'WORK INSTRUCTIONS', 0, 'sa', 'sa', DEFAULT, DEFAULT, DEFAULT, 0);";
```

I wrote the lines out as they were generated, then copied the contents of the resulting file and pasted them into SSMS. Before running the script I had to put “SET IDENTITY_INSERT SpecificNotes ON” at the beginning and “SET IDENTITY_INSERT SpecificNotes OFF” at the end.

With my notes added to the database, I then reran the code to generate the Current Operations lines. This time, a different piece of code in the routine looks for a note now in the CSI database that matches the note text held by each Current Operation line object:
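The two manual steps around the generated file (doubling single quotes in the note text and adding the IDENTITY_INSERT lines by hand) could also be done in code. The sketch below is an assumption about how that might look, not what I actually ran; `NoteScript` and both method names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class NoteScript
{
    // Doubles single quotes so the text can sit inside a T-SQL string literal.
    public static string EscapeSqlLiteral(string text) => text.Replace("'", "''");

    // Wraps generated statements in SET IDENTITY_INSERT ON/OFF for one table,
    // so the resulting file can be pasted into SSMS as-is.
    public static string WrapWithIdentityInsert(string table, IEnumerable<string> statements)
    {
        var sb = new StringBuilder();
        sb.AppendLine($"SET IDENTITY_INSERT {table} ON;");
        foreach (var statement in statements)
            sb.AppendLine(statement);
        sb.AppendLine($"SET IDENTITY_INSERT {table} OFF;");
        return sb.ToString();
    }
}
```

Keep in mind that escaping only covers single quotes; other odd characters in the note text can still cause trouble when matching the notes back later.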

```csharp
//get notes from CSI
var snotes = from s in si.SpecificNotes
             select new { s.NoteContent, s.SpecificNoteToken };

List<sNotes> sNoteList = new List<sNotes>();
foreach (var s in snotes)
{
    sNoteList.Add(new sNotes());
    sNoteList.Last().Token = (int)s.SpecificNoteToken;
    // Re-double single quotes so the text matches the escaped form held on the operation lines
    sNoteList.Last().Note = s.NoteContent.Trim().Replace("'", "''");
}

//Marry the notes in the note DB to the process lines, OYE!
foreach (var n in syteCurrOps2)
{
    foreach (var s in sNoteList)
    {
        if (!s.Used)
        {
            //Note: essential that the notes read out of the database match the ones inserted (i.e. avoid whitespace issues!)
            if (n.NoteText.Trim().Equals(s.Note))
            {
                // Claim the first unused note with identical text, then move on to the next line
                n.NoteNum = s.Token;
                s.Used = true;
                worker.ReportProgress(i++);
                break;
            }
        }
    }
}
```
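The nested loops above compare every operation line against every unused note, which gets slow as the note count grows. A possible alternative, sketched here under the assumption that both sides have already been normalized the same way (trimmed, quotes doubled), is to index the notes by text first. `NoteMatcher` is a hypothetical helper, not part of my export code.

```csharp
using System;
using System.Collections.Generic;

public static class NoteMatcher
{
    // For each operation's note text, returns the token of the first unused
    // database note with identical trimmed text, or null if none is left.
    public static int?[] Match(
        IReadOnlyList<string> opNoteTexts,
        IEnumerable<(int Token, string Text)> dbNotes)
    {
        // Group tokens by note text; a queue keeps them in database order
        // so duplicates are handed out first-come, first-served.
        var unused = new Dictionary<string, Queue<int>>();
        foreach (var (token, text) in dbNotes)
        {
            var key = text.Trim();
            if (!unused.TryGetValue(key, out var queue))
                unused[key] = queue = new Queue<int>();
            queue.Enqueue(token);
        }

        var result = new int?[opNoteTexts.Count];
        for (int i = 0; i < opNoteTexts.Count; i++)
        {
            if (unused.TryGetValue(opNoteTexts[i].Trim(), out var queue) && queue.Count > 0)
                result[i] = queue.Dequeue(); // each token is used at most once
        }
        return result;
    }
}
```

Returning null for "no match" rather than 0 is a deliberate choice in this sketch: the insert statements start tokens at 0 when the table is empty, so 0 can be a real token.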

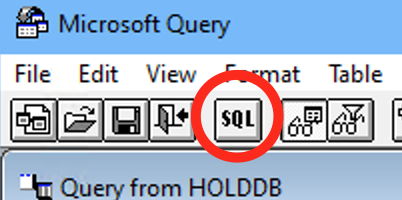

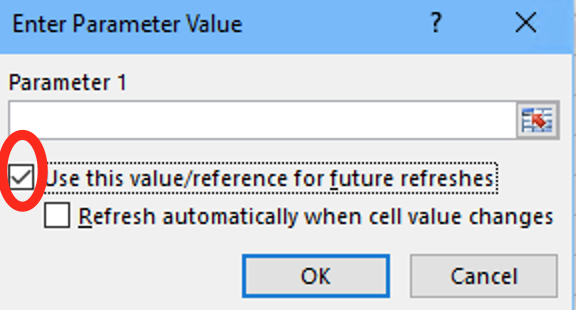

Now that I know which note in the database goes with which router line, I need a row in the ObjectNotes table to tie them together. To restate a key point: a normal note must first be added manually to a Current Operation line so that CSI knows which table the notes are associated with. Add that note, then query the NoteHeaders table to see which number must be used in the SQL INSERT statements for the ObjectNotes table.

In this case the code has a 4 hard-coded in the insert statement, but I would want to change it to a 1:

```csharp
//NOTE: change #4# below to the number specified in the NoteHeaderToken field in the NoteHeaders table
// This number is set when the "sample" note was created earlier (which is why a sample note must be created before insert)
string sqlCmd = "INSERT INTO [ObjectNotes] (ObjectNoteToken, NoteHeaderToken, RefRowPointer, NoteType, SpecificNoteToken, " +
    "NoteExistsFlag, CreatedBy, UpdatedBy, CreateDate, RecordDate, RowPointer, InWorkflow) " +
    "VALUES ((SELECT ISNULL(MAX(ObjectNoteToken) + 1, 0) FROM dbo.[ObjectNotes]), 4, '" + n.RefRow + "', 0, " + n.NoteNum + ", 0, " +
    "'sa', 'sa', DEFAULT, DEFAULT, DEFAULT, 0);";

sw.WriteLine(sqlCmd);
```

Just as with the notes query, I write this list of statements out to a text file so that it can be run in SSMS, again with the “SET IDENTITY_INSERT … ON/OFF” lines before and after the statements (this time naming the ObjectNotes table, since that is the table being inserted into).

The last step: since all these notes were entered through SQL and not the interface, CSI needs to be told which specific Current Operation lines have notes. We do this by setting the NoteExistsFlag bit field to 1. So again, I just dump out a series of lines to paste into SSMS that update all of the lines that now have notes, based on their row pointers:
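Rather than editing a hard-coded header number in the string, the ObjectNotes insert could take it as a parameter. This is only a sketch: `ObjectNoteSql.BuildInsert` is a hypothetical helper, and the GUID check assumes the row pointers are uniqueidentifier values, which you should confirm against your own database.

```csharp
using System;

public static class ObjectNoteSql
{
    // Builds one ObjectNotes INSERT for a given NoteHeaders number, router row
    // pointer, and note token. Assumes refRowPointer is a GUID; anything else
    // is rejected rather than being concatenated into the SQL text.
    public static string BuildInsert(int noteHeaderToken, string refRowPointer, int specificNoteToken)
    {
        if (!Guid.TryParse(refRowPointer, out var rowGuid))
            throw new ArgumentException("RefRowPointer is expected to be a GUID.", nameof(refRowPointer));

        return "INSERT INTO [ObjectNotes] (ObjectNoteToken, NoteHeaderToken, RefRowPointer, NoteType, " +
               "SpecificNoteToken, NoteExistsFlag, CreatedBy, UpdatedBy, CreateDate, RecordDate, RowPointer, InWorkflow) " +
               "VALUES ((SELECT ISNULL(MAX(ObjectNoteToken) + 1, 0) FROM dbo.[ObjectNotes]), " +
               $"{noteHeaderToken}, '{rowGuid}', 0, {specificNoteToken}, 0, " +
               "'sa', 'sa', DEFAULT, DEFAULT, DEFAULT, 0);";
    }
}
```

With this shape the header number looked up in NoteHeaders is passed in once at the call site, for example `ObjectNoteSql.BuildInsert(1, n.RefRow, n.NoteNum)`, instead of being edited inside the SQL string.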

```csharp
foreach (var n in syteCurrOps2)
{
    if (!n.RefRow.Equals("") && n.NoteNum > 0)
    {
        string sqlCmd = "UPDATE jobroute_mst SET NoteExistsFlag=1 WHERE RowPointer='" + n.RefRow + "';";
        sw.WriteLine(sqlCmd);
        worker.ReportProgress(i++);
    }
    else if (n.RefRow.Equals("") && n.NoteNum > 0)
        logger("Rowless line: " + "Item: " + n.Item + " Seq " + n.Seq);
}
```

So in the end there are three sets of SQL statements exported: one for each of the two related notes tables, and one for the table that is having the notes added to it. The “else if” branch at the end of that snippet logs lines that have a note but no row pointer to attach it to. Mismatches typically happened due to odd characters (‘, ”, *, etc.) or whitespace differences, but overall the process was quite reliable.

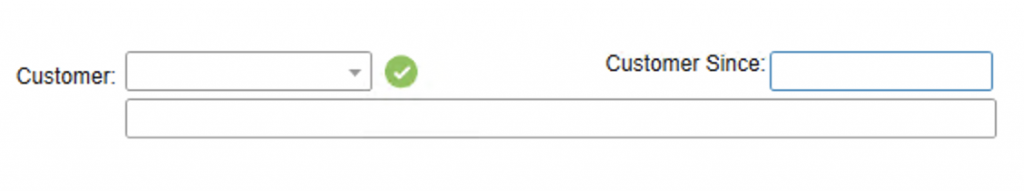

Slightly off topic, but you may have noticed the “worker.ReportProgress” line in some of the code. Because some of the queries took a long time to run, I had to move my main methods onto a separate thread. Every now and then in the code I added these ReportProgress calls so that my (very) inexact progress meter would update on the interface, letting me know the export was still running and giving me some idea of how far along it was. So in the code behind my “export button” I had something like this to launch the Current Operation export process:

```csharp
//extracts have to launch in a new thread so that the parent thread won't hang and progress can be tracked
worker.WorkerSupportsCancellation = true;
worker.WorkerReportsProgress = true;

if (comboBoxExpType.Text.Equals("CurrentOps"))
    worker.DoWork += new DoWorkEventHandler(exportCurrentOps);

worker.RunWorkerCompleted += new RunWorkerCompletedEventHandler(worker_RunWorkerCompleted);
worker.ProgressChanged += new ProgressChangedEventHandler(worker_ProgressChanged);
worker.RunWorkerAsync();
```